It has been frustrating to watch the FBI use the horrific San Bernardino terrorist killing spree as cover to advance the anti-encryption goals it has been working toward for years. Much of that frustration stems from how poorly the American media has reported the facts of this case.

The real issue at play is that the FBI wants backdoor access to any and all forms of encryption, and it is willing to demonize Apple in order to establish an initial precedent it can then use against all other software and hardware makers, all of whom are smaller and far less likely to even attempt to stand up to government overreach.

Yet the media has constantly echoed the FBI’s blatantly false claims that it “does not really want a backdoor,” that it only cares about “just this one” phone, that all that’s really involved is “Apple’s failure to cooperate in unlocking” this single device, and that there “isn’t really any precedent that would be set.” Every thread of that tapestry is untrue, and even the government has now repeatedly admitted as much.

Representative democracy doesn’t work if the population only gets worthless information from the fourth estate.

Instead, in case after case, journalists have penned entertainment posing as news, including a bizarre fantasy written up by Mark Sullivan for Fast Company detailing “How Apple Could Be Punished For Defying FBI.”

A purportedly respectable polling company asked the public whether Apple should cooperate with the police in a terrorism case. But that wasn’t the issue at hand. The real issue is whether the U.S. federal government should effectively make real encryption illegal by mandating that companies break their own security so that the FBI doesn’t have to do the work itself.

The Government’s Anti-Encryption Charm Offensive

Last Friday, U.S. Attorney General Loretta Lynch appeared on The Late Show with Stephen Colbert to insist once again that this is a limited case involving a single device, that it has nothing to do with a backdoor, and that it is really just a matter of the phone’s owner, the county, asking Apple for assistance in a normal customer service call.

Over the weekend, President Obama appeared at SXSW to gain support for the FBI’s case, stating outright that citizens’ expectation that encryption should actually work is “incorrect” and “absolutist.”

He actually stated that, “If your argument is ‘strong encryption no matter what, and we can and should in fact create black boxes,’ that I think does not strike the kind of balance we have lived with for 200, 300 years. And it’s fetishizing our phone above every other value, and that can’t be the right answer.”

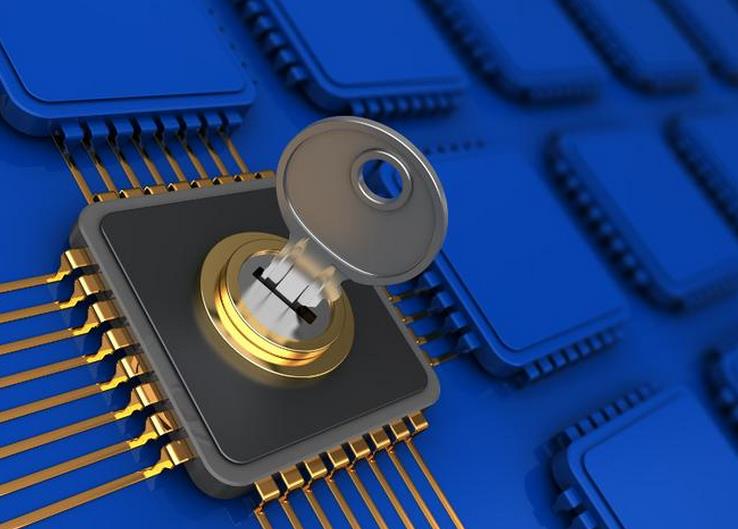

That’s simply technically incorrect. There is no “balance” possible in the debate over encryption. Either we have access to real encryption or we don’t. It is very much an issue of absolutes. Real encryption means that the data is absolutely scrambled, the same way that a paper shredder absolutely obliterates documents. If there is a route to defeat encryption on a device or between two devices, it’s a backdoor, whether or not the government wants to play a deceptive word game about it.

If the government obtains a warrant, that means it has the legal authority to seize evidence. It does not mean that the agencies involved have an unbridled right to conscript unrelated parties into working on their behalf to decipher, translate or recreate any bits of data that are discovered.

If companies like Apple are forced by the government to build security backdoors that get around encryption, then those backdoors will also be available to criminals, to terrorists, to repressive regimes and to our own government agencies, which have an atrocious record both of protecting the security of the data they collect and of deciding what information they should be collecting in the first place.

For every example of a terrorist with collaborator contacts on his phone, a criminal with photos of their crimes on their phone, or a child pornographer with smut on their computer, there are thousands of individuals who could be hurt by terrorists using backdoors to cover their tracks while plotting an attack, by criminals exploiting backdoors in their victims’ devices to stalk their actions and locations, or by criminal gangs distributing illicit content that slips past security barriers the same way the police hope to slip past encryption on devices.

Security is an absolutist position. You either have it or you don’t.

Obama was right in one respect. He noted that in a world with “strong, perfect encryption,” it could be that “what you’ll find is that after something really bad happens the politics of this will swing and it will become sloppy and rushed. And it will go through Congress in ways that have not been thought through. And then you really will have a danger to our civil liberties because the [...] disengaged or taken a position that is not sustainable.”

However, the real way to avoid “sloppy and rushed” panic-driven legislation is to establish clear rights for citizens and companies to create and use secure tools, even if there is some fear that secure devices may prevent police from reaching some of the evidence they would like to access in certain cases.

The United States makes no effort to restrict the kinds of weapons that were actually used to commit the San Bernardino atrocity. Likewise, it should not insist that American encryption work only with a backdoor left open on the side, giving police full access to any data they might want.

It’s not just a bad idea; it’s one that will accomplish nothing, because anyone nefarious who wants to hide their data from the police can simply use non-American encryption products that the FBI, the president and the U.S. Congress have no ability to weaken, regardless of how much easier weakening them would make things for police.